Human-AI Interaction

Research Staff

Professor

Sakriani SAKTI

Associate Professor

Hiroki OUCHI

Assistant Professor

Faisal MEHMOOD

Assistant Professor

Bagus Tris ATMAJA

Research Areas

The Human-AI Interaction (HAI) Laboratory pursues research to enhance communication and collaboration between humans and artificial intelligence. This includes exploring speech, text, and image interactions, as well as the interplay between language and paralanguage. Using cutting-edge AI technologies such as deep learning, we aim to achieve effective synergy between humans and machines, working toward a future of collaborative intelligence.

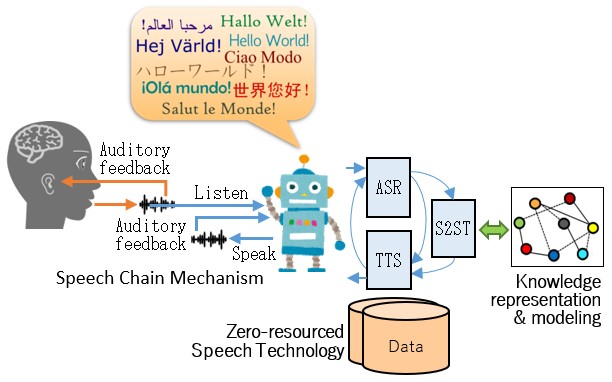

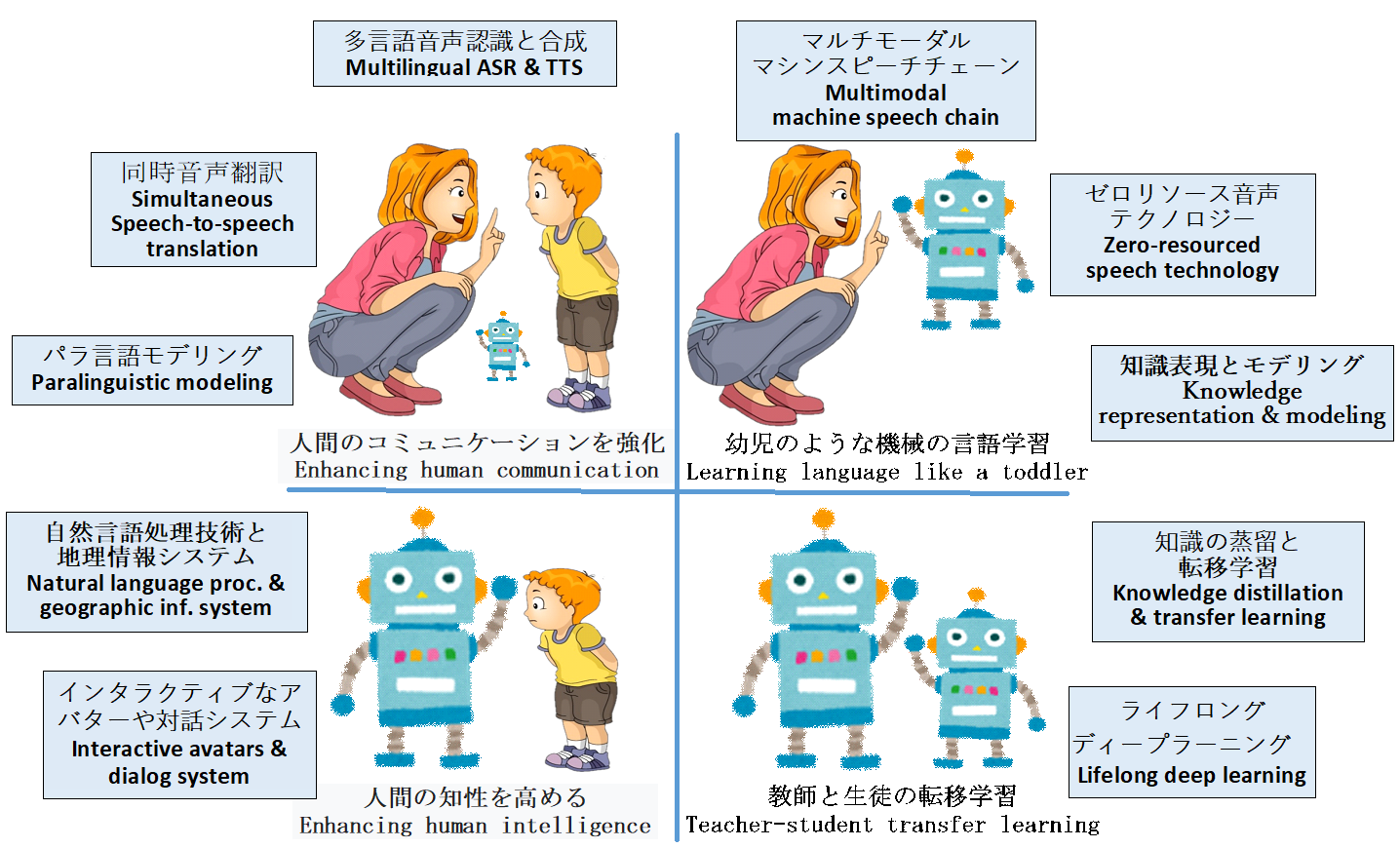

Multisensory Communicative AI

Natural communication involves not only the auditory and visual sensory systems but also the interaction between them. The research focuses on developing technologies that enable machines to listen, to speak, to visualize, and to listen while speaking. This includes advances in automatic speech recognition (ASR), text-to-speech synthesis (TTS), image retrieval (IR), image generation (IG), and machine speech chains in multilingual, multimodal, and multi-talker settings. The work also addresses linguistic code-switching, emotional nuance, spontaneous conversational context, incremental processing, and dynamic adaptation.

Spatial Intelligence-Integrated Language AI

The research aims to give AI systems comprehensive linguistic capabilities in natural language processing, including real-time language understanding, context-aware language generation, and human-like simultaneous speech translation that translates directly from speech to speech in real time while conveying both linguistic and paralinguistic information. It also integrates geospatial capabilities for analyzing and interpreting geographical context, extending the AI system's capabilities beyond linguistic understanding to location-aware context comprehension.

Diverse Interactive AI

The research encompasses a wide range of technologies that enhance interaction between humans and machines. This includes developing interactive avatars, tele-existence systems, intelligent dialog systems, and view-sharing systems, and integrating VR/AR technologies to create seamless, immersive interaction and collaboration. Exploring new interfaces and modalities also contributes to a deeper understanding of human cognition and of machine learning capabilities in interactive environments.

Representation Learning and Developmental AI

This research area focuses on deep learning techniques inspired by human learning processes. This includes zero-resource speech technology that emulates infant learning, unsupervised and semi-supervised learning, and model complexity reduction for environments with limited computational resources, as well as never-ending learning and self-awareness and self-improvement mechanisms. It also explores transfer learning and knowledge distillation to improve task performance. In addition, the research investigates universal information representation modeling, emphasizing multimodal and multilingual capabilities so that AI systems can efficiently process diverse data sources and languages.

Key Features

We welcome students who are enthusiastic and passionate about human-AI interaction and willing to devote themselves actively to research. We focus not only on theoretical aspects but also on the practical applicability of technology. Our motto is to learn from humans and apply our results for the benefit of humanity.