Cybernetics and Reality Engineering

Manipulate reality to augment human capabilities

Research Staff

- Professor: Kiyoshi KIYOKAWA

- Associate Professor: Hideaki UCHIYAMA

- Affiliate Associate Professor: Nobuchika SAKATA

- Affiliate Associate Professor: Naoya ISOYAMA

- Assistant Professor: Monica PERUSQUÍA-HERNÁNDEZ

- Assistant Professor: Yutaro HIRAO

- Affiliate Assistant Professor: Felix DOLLACK

Research Areas

Fig.1: Research fields

Fig.2: Super wide field-of-view occlusion-capable optical see-through HMD

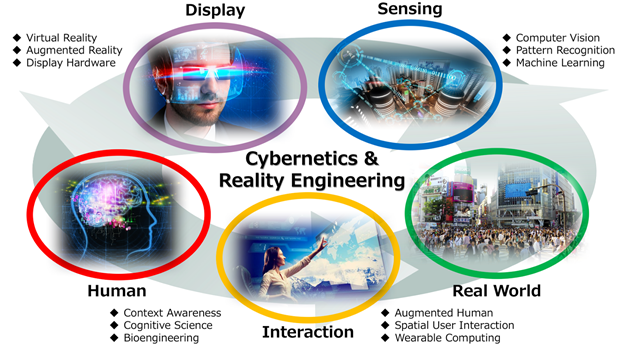

Fig.3: Gustatory manipulation by using GAN-based real-time food-to-food translation

Fig.4: Controlling interpersonal distance

Fig.5: A variety of head mounted displays

Long before computers appeared, humans acquired new capabilities by inventing tools and mastering them as if they were part of the body. In this laboratory, we conduct research to create "tools of the future" by making full use of virtual reality (VR), augmented reality (AR), mixed reality (MR), human and environmental sensing, sensory representation, wearable computing, context awareness, machine learning, biological information processing, and other technologies (Fig. 1). We aim to help people live more conveniently, comfortably, and securely by offering a "personalized reality" that empathizes with each person. Through such information systems, we would like to contribute to the realization of an inclusive society where all people can maximize their abilities and help each other.

Sensing: Measuring people and the environment

We are studying various sensing technologies that assess human and environmental conditions using computer vision, pattern recognition, machine learning, etc.

- Estimation of a user’s physiological and psychological state from gaze and body behavior

- HMD calibration and gaze tracking using corneal reflection images

Display: Manipulating perception

We are studying technologies, such as virtual reality and augmented reality, that freely manipulate and modulate sensations such as vision and hearing, as well as the effects of such manipulation and the display hardware it requires.

- Super wide field-of-view occlusion-capable see-through HMD (Fig. 2)

- Gustatory manipulation by GAN-based food-to-food translation (Fig. 3)

- Tendon vibration to increase vision-induced kinesthetic illusions in VR

- A non-grounded and encountered-type haptic display using a drone

Interaction: Creating and using tools

We combine sensing and display technologies to study new ways of interaction between people, and between people and the environment.

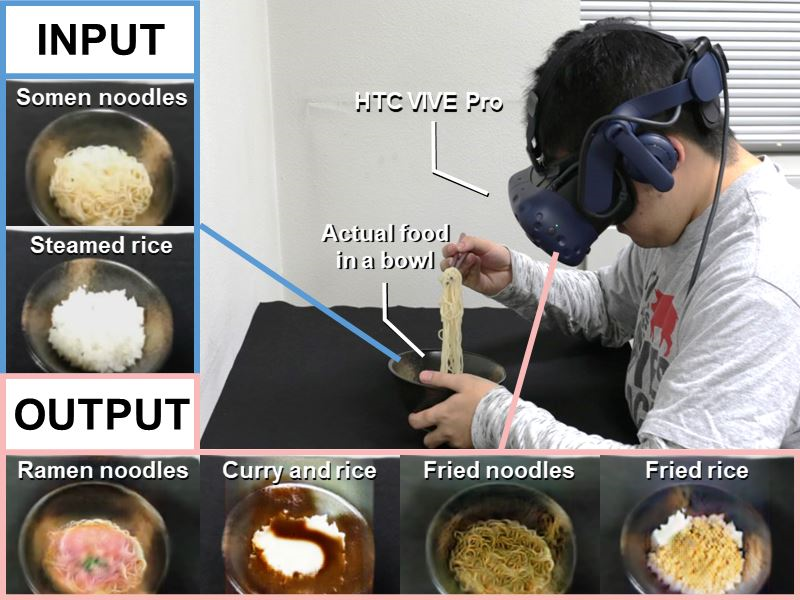

- Controlling interpersonal distance by a depth sensor and a video see-through HMD (Fig. 4)

- Investigation of priming effects of visual information on wearable displays

- An AR pet that recognizes people and the environment and has its own emotions

Research Equipment

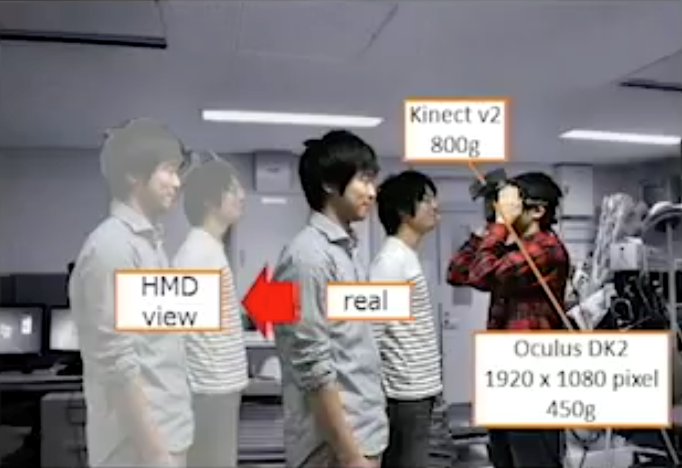

- A variety of head mounted displays (Fig. 5)

Research Grants, Collaborations, Social Services etc. (2019)

- MEXT Grants-in-Aid (Kakenhi) (A x 2, B x 3, C x 2), SCOPE, JASSO, etc.

- Collaboration (TIS, CyberWalker, etc.)

- Steering / Organizing Committee members of IEEE VR, ISMAR, APMAR, etc.